Importing data into PostgreSQL is a crucial task for many data professionals.

Whether you’re migrating from another database system, loading data from external sources, or restoring backups, understanding the various techniques and best practices is essential for a smooth and efficient import process. In this comprehensive guide, we’ll explore the fundamentals of importing data into PostgreSQL, covering data preparation, supported formats, import methods, and troubleshooting tips.

PostgreSQL Import 101: Understanding the Basics

Before diving into the specifics of importing data into PostgreSQL, it’s important to grasp the basics and lay a solid foundation for success.

The Importance of Data Preparation and Validation

One of the most critical steps in the data import process is proper data preparation and validation. Before attempting to import any data into PostgreSQL, ensure that your data is clean, consistent, and well-structured. This involves:

- Checking for missing or null values

- Validating data types and formats

- Removing any inconsistencies or duplicates

- Ensuring data integrity and referential constraints

By thoroughly preparing and validating your data beforehand, you can minimize potential issues during the import process and ensure data accuracy and reliability.
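The checks listed above can be sketched in a few lines of Python before any data reaches PostgreSQL. This is a minimal illustration rather than a complete validator: the function name `validate_rows` and the column names in the usage note are hypothetical, and line numbers assume that no quoted field spans multiple lines.

```python
import csv
import io


def validate_rows(csv_text, required, int_columns, key_column):
    """Return a list of (line_number, message) problems found in CSV text."""
    problems = []
    seen_keys = set()
    reader = csv.DictReader(io.StringIO(csv_text))
    # Line 1 is the header, so data rows start at line 2.
    for line_no, row in enumerate(reader, start=2):
        # Missing or empty required values.
        for col in required:
            if not (row.get(col) or "").strip():
                problems.append((line_no, f"missing value in '{col}'"))
        # Values that would not fit an integer column.
        for col in int_columns:
            value = (row.get(col) or "").strip()
            if value:
                try:
                    int(value)
                except ValueError:
                    problems.append(
                        (line_no, f"'{col}' is not an integer: {value!r}")
                    )
        # Duplicate keys that would violate a primary key or unique constraint.
        key = row.get(key_column)
        if key in seen_keys:
            problems.append((line_no, f"duplicate key {key!r}"))
        seen_keys.add(key)
    return problems
```

For example, `validate_rows(text, ["id", "name"], ["age"], "id")` returns an empty list when every row has an `id` and a `name`, every `age` parses as an integer, and no `id` repeats. Referential checks against other tables are out of scope for this sketch.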

Supported Data Formats in PostgreSQL

PostgreSQL supports a wide range of data formats for importing data, providing flexibility and compatibility with various data sources. Some of the commonly used data formats include:

- SQL Dumps: SQL dump files (.sql) contain a series of SQL statements that can be executed to recreate the database structure and populate it with data. These files are often used for database backups and migrations.

- CSV Files: Comma-Separated Values (CSV) files are plain text files that store tabular data, with each line representing a row and commas separating the values. PostgreSQL can easily import data from CSV files using the COPY command.

- JSON and JSONB: PostgreSQL supports importing data in JSON (JavaScript Object Notation) format, which is a lightweight data interchange format. The JSONB data type provides efficient storage and querying capabilities for JSON data.

- XML: PostgreSQL also supports importing data in XML (eXtensible Markup Language) format, allowing you to store and query hierarchical data structures.

Understanding the supported data formats enables you to choose the most suitable format based on your data source and requirements.

Overview of PostgreSQL Import Methods

PostgreSQL offers several methods for importing data, catering to different scenarios and preferences. The most common import methods include:

- Command Line: PostgreSQL provides command-line tools like psql and pg_restore that allow you to import data directly from the terminal. These tools are particularly useful for scripting and automating import tasks.

- pgAdmin: pgAdmin is a popular graphical user interface (GUI) tool for managing PostgreSQL databases. It provides a user-friendly interface for importing data through its import/export functionality.

- SQL Statements: You can use SQL statements like COPY and INSERT to load data into PostgreSQL tables. These statements offer fine-grained control over the import process and allow you to specify data transformations and mappings.

Familiarizing yourself with these import methods will help you choose the most appropriate approach based on your specific requirements and the complexity of your data.

Importing SQL Dumps into PostgreSQL

SQL dumps are a common way to back up and restore PostgreSQL databases. They contain a series of SQL statements that can recreate the database structure and populate it with data. Let’s explore the process of importing SQL dump files into a PostgreSQL database.

Step-by-Step Guide

- Create a new database or select an existing one where you want to import the SQL dump.

- Open a terminal or command prompt and navigate to the directory where the SQL dump file is located.

- Use the psql command-line tool to import the SQL dump file:

```
psql -U username -d database_name -f dump_file.sql
```

Replace username with your PostgreSQL username, database_name with the target database, and dump_file.sql with the path to your SQL dump file.

- Enter the password for the specified user when prompted.

- The SQL statements in the dump file will be executed, creating the necessary tables, indexes, and other database objects, and populating them with data.
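For scripted imports, the same psql call can be assembled and run from Python. The sketch below is one possible wrapper, not a prescribed method: the helper names `build_psql_import` and `run_import` are hypothetical, and the two extra options (`-v ON_ERROR_STOP=1` and `--single-transaction`) are additions beyond the command shown in the steps, included so that a failing statement aborts the import instead of leaving it half applied.

```python
import subprocess


def build_psql_import(username, database, dump_path, single_transaction=True):
    """Build the argument list for importing a plain SQL dump with psql."""
    cmd = [
        "psql",
        "-U", username,
        "-d", database,
        "-v", "ON_ERROR_STOP=1",  # stop at the first failing statement
        "-f", dump_path,
    ]
    if single_transaction:
        # Wrap the whole file in one transaction: all or nothing.
        cmd.append("--single-transaction")
    return cmd


def run_import(cmd):
    """Run psql and raise CalledProcessError on a non-zero exit status."""
    subprocess.run(cmd, check=True)
```

Calling `run_import(build_psql_import("username", "database_name", "dump_file.sql"))` runs the import. psql still prompts for the password unless one is supplied through the `PGPASSWORD` environment variable or a `~/.pgpass` file.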

Advantages and Limitations of SQL Dumps

SQL dumps offer several advantages for data import:

- They provide a complete backup of the database structure and data.

- They are easy to create and restore using standard PostgreSQL tools.

- They allow for selective export and import: pg_dump can dump specific tables with -t, and pg_restore can restore selected objects from custom-format archives.

However, there are also some limitations to consider:

- SQL dumps can be large in size, especially for databases with a significant amount of data.

- Importing large SQL dumps can be time-consuming and resource-intensive.

- SQL dumps may not be suitable for incremental updates or synchronization scenarios.

Tips for Optimizing SQL Dump Imports

To optimize the import process and handle large SQL dump files efficiently, consider the following tips:

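- Use the --single-transaction flag with psql or pg_restore so the import runs as one transaction: it either completes fully or rolls back, leaving no partially loaded data. (pg_dump has no such flag; it already exports from a consistent snapshot.)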

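- Compress the SQL dump file using tools like gzip to reduce its size for storage and transfer. A compressed dump can be streamed straight into psql (for example, gunzip -c dump_file.sql.gz | psql -U username -d database_name) without unpacking it to disk first.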

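- Consider using parallel restore for large databases: dump in the custom or directory format (pg_dump -Fc or -Fd) and restore with pg_restore --jobs, which loads data and builds indexes concurrently. Plain SQL dumps cannot be restored in parallel this way.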

- Drop indexes and foreign key constraints before loading data into an existing table, and recreate them afterward. PostgreSQL has no switch to disable an index, so dropping and recreating is the usual approach.

- Monitor the import progress and resource utilization to identify any bottlenecks or performance issues.

By following these tips and best practices, you can streamline the SQL dump import process and ensure a smooth and efficient data migration.
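As an illustration of the compression tip, a gzip-compressed dump can be decompressed on the fly and piped into psql without writing the unpacked file to disk. This is a sketch under assumptions: the function names `stream_gzip` and `import_compressed_dump` are hypothetical, and the psql options mirror the command used earlier in this guide plus `ON_ERROR_STOP`.

```python
import gzip
import shutil
import subprocess


def stream_gzip(dump_gz_path, sink):
    """Decompress a gzip file into a binary file-like object, chunk by chunk."""
    with gzip.open(dump_gz_path, "rb") as source:
        shutil.copyfileobj(source, sink)


def import_compressed_dump(dump_gz_path, username, database):
    """Pipe a gzip-compressed SQL dump into psql's standard input."""
    # With no -f option, psql reads SQL statements from standard input.
    cmd = ["psql", "-U", username, "-d", database, "-v", "ON_ERROR_STOP=1"]
    proc = subprocess.Popen(cmd, stdin=subprocess.PIPE)
    try:
        stream_gzip(dump_gz_path, proc.stdin)
    finally:
        proc.stdin.close()
    if proc.wait() != 0:
        raise RuntimeError(f"psql exited with status {proc.returncode}")
```

Because the data is copied in chunks, memory use stays flat regardless of dump size. For dumps in the custom format, pg_restore is the appropriate tool instead.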

Alternative Approach: Import PostgreSQL Data in Seconds From Your Spreadsheet

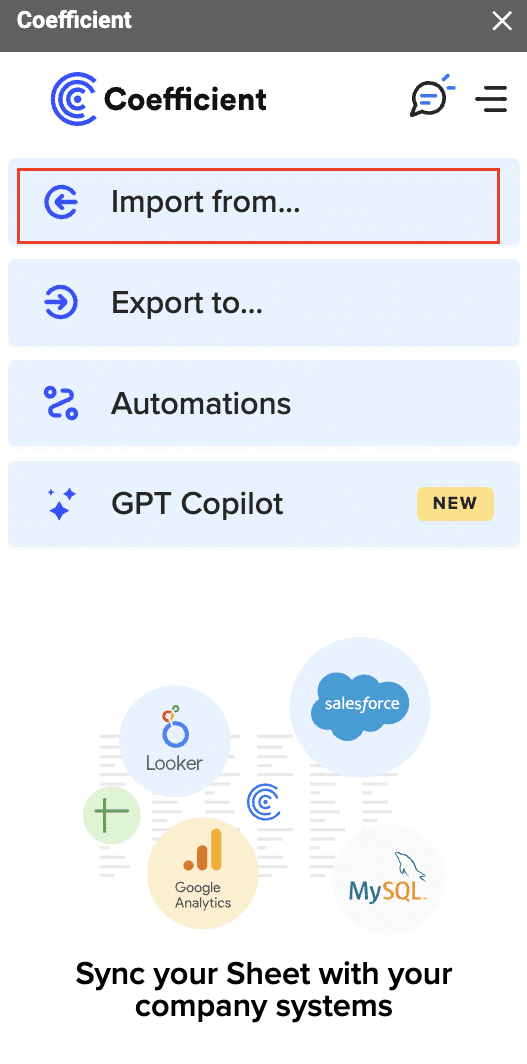

While PostgreSQL’s built-in tools are useful, they often fall short for data teams needing up-to-the-minute insights. Coefficient fills this gap, offering a direct link between your PostgreSQL database and your spreadsheets.

- Real-Time Data Access: Coefficient syncs live data from PostgreSQL directly into Excel and Google Sheets, ensuring your reports always reflect the most up-to-date information. This eliminates the need for manual exports and imports, saving time and reducing the risk of working with outdated data.

- Automated Reporting: Build reports and dashboards that refresh automatically, freeing up your team’s time to focus on analysis rather than data preparation. This automation reduces the potential for human error and ensures consistency in your reporting processes.

- Enhanced Data Governance: Coefficient’s enterprise-grade security and access controls allow you to give teams access to source-of-truth data while maintaining control. This balance between accessibility and security is crucial for data teams managing sensitive information across various departments.

How Coefficient Works with PostgreSQL

Coefficient offers three ways to bring PostgreSQL data into your spreadsheets:

- Tables & Columns Import: Choose specific tables and columns to import.

- Custom SQL Query Import: Write SQL queries for more complex data pulls.

- GPT SQL Builder: Use plain English to generate SQL queries.

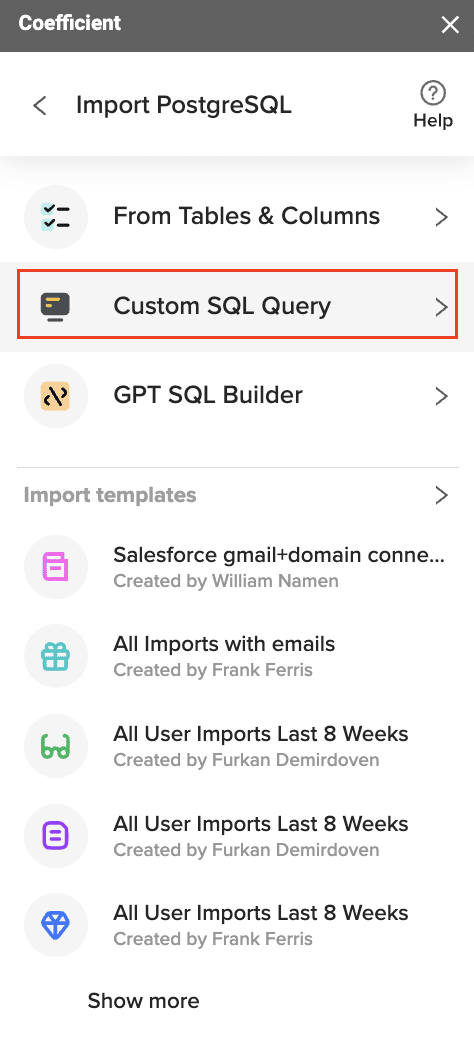

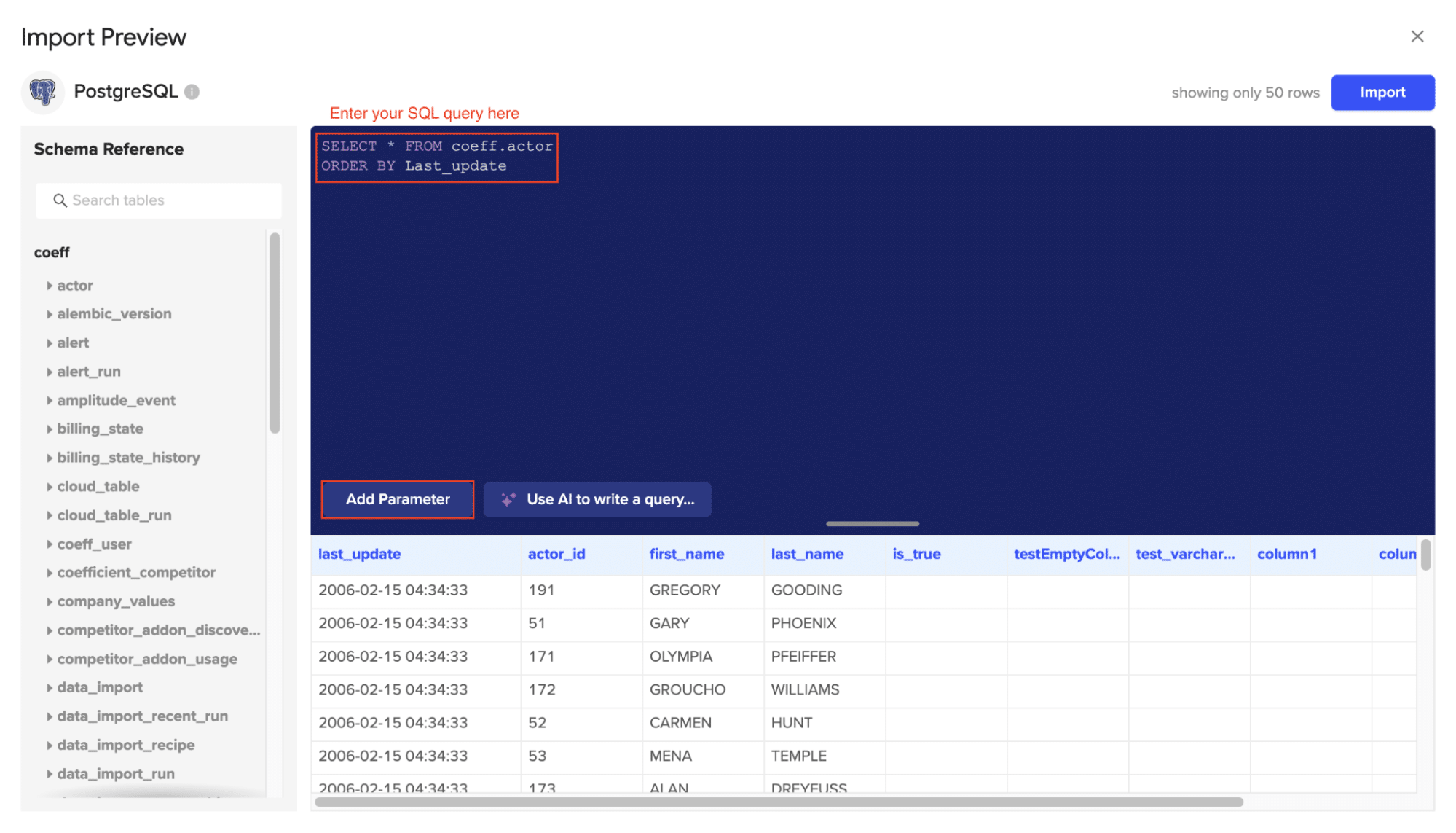

Let’s explore the Custom SQL Query Import method, which offers maximum flexibility for data teams:

Open Coefficient’s sidebar and select “Import from.”

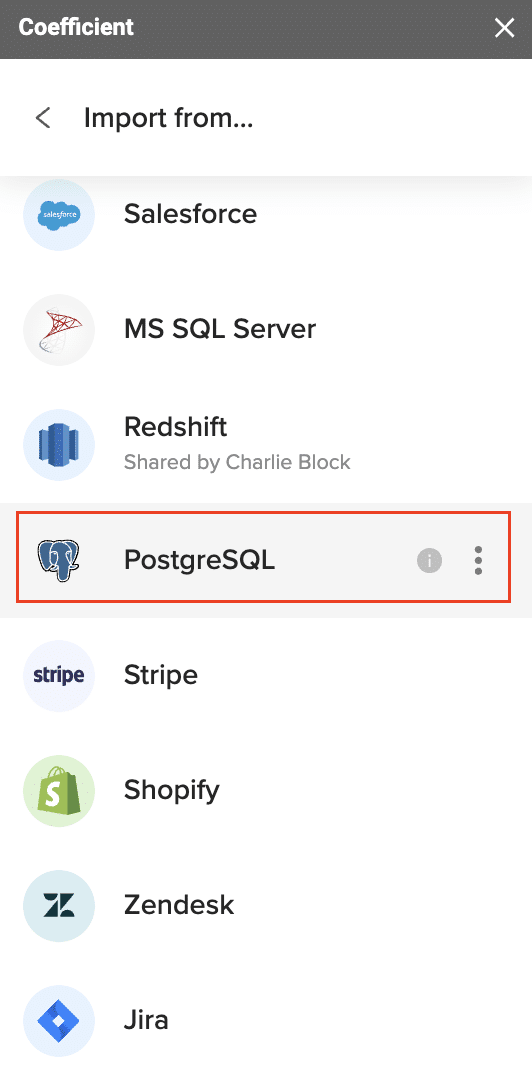

Select “PostgreSQL” from the list.

Choose “Custom SQL Query.”

Write your SQL query in the provided window.

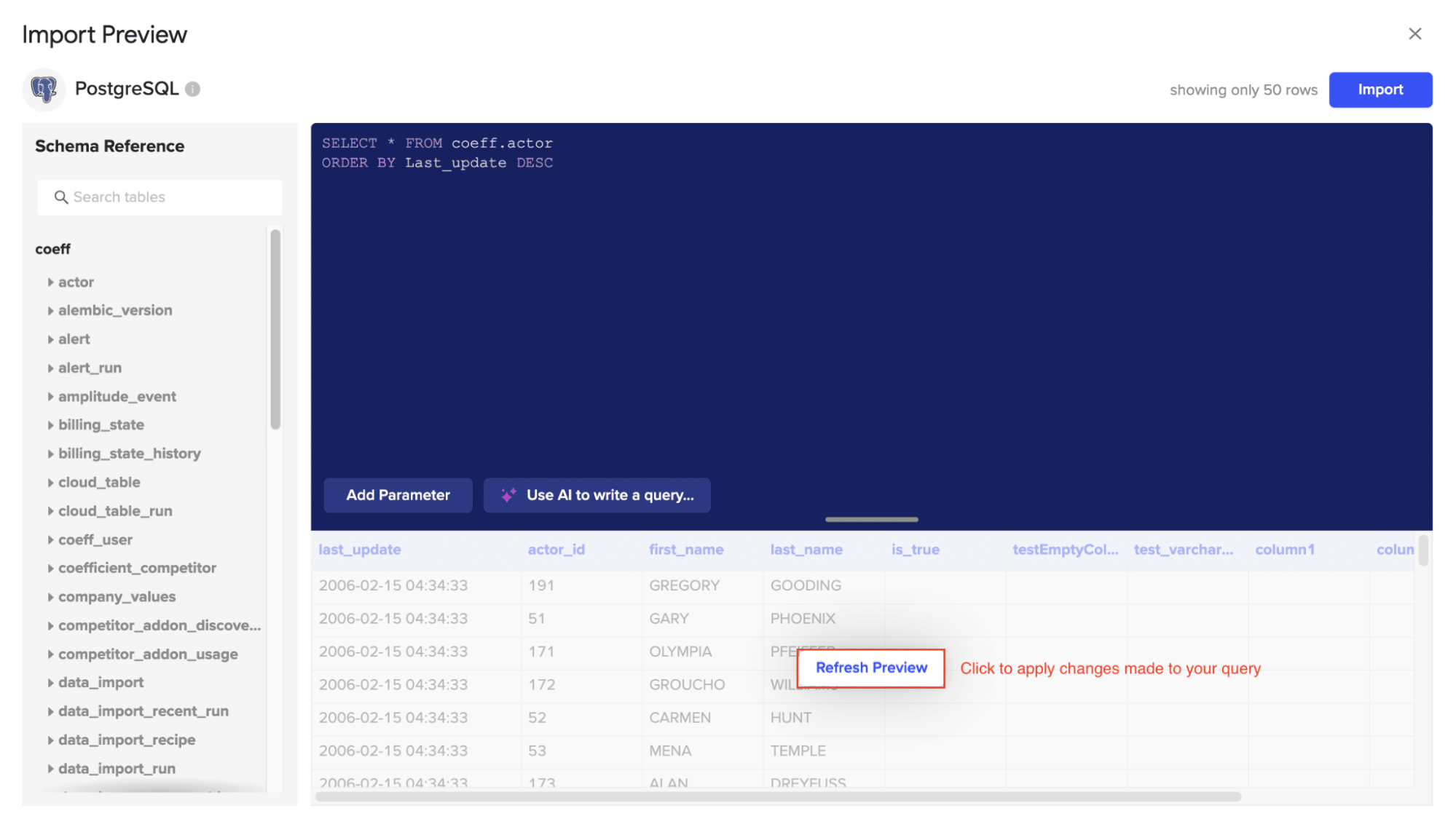

Click “Refresh Preview” to check your results before you import your data.

Click “Import.”

Your PostgreSQL Custom SQL import will automatically populate your spreadsheet in a few seconds!

Optimizing and Troubleshooting PostgreSQL Imports

Best Practices for PostgreSQL Imports

Use COPY for bulk imports: The COPY command is significantly faster than INSERT for large datasets. Example:

```sql
COPY table_name FROM '/path/to/file.csv' WITH (FORMAT csv, HEADER true);
```
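Note that COPY ... FROM with a file path reads the file on the database server, which requires appropriate server-side privileges. When the CSV file lives on the client machine, psql’s \copy meta-command performs the same load through the client connection. The sketch below builds such a command; the helper name `build_client_copy` is hypothetical, the table name is assumed to be trusted, and paths containing quotes or backslashes are rejected rather than escaped.

```python
def build_client_copy(table, csv_path, header=True):
    """Build a psql \\copy meta-command that reads a CSV file on the client."""
    # Reject characters that would need escaping inside the quoted path.
    if "'" in csv_path or "\\" in csv_path:
        raise ValueError("path must not contain quotes or backslashes")
    header_flag = "true" if header else "false"
    return (
        f"\\copy {table} FROM '{csv_path}' "
        f"WITH (FORMAT csv, HEADER {header_flag})"
    )
```

The resulting string can be passed to psql with the -c option, for example as the last element of an argument list such as `["psql", "-U", "username", "-d", "database_name", "-c", command]`.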

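Disable triggers and drop indexes: During large imports into an existing table, per-row trigger execution, foreign key checks, and index maintenance slow the load. PostgreSQL cannot disable an index, so drop secondary indexes before the import and recreate them afterward. Triggers can be switched off with ALTER TABLE ... DISABLE TRIGGER ALL; this also disables the internal triggers that enforce foreign keys, requires superuser privileges, and leaves the loaded rows unchecked, so validate the data separately. Re-enable triggers after the import: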

```sql
ALTER TABLE table_name DISABLE TRIGGER ALL;

-- Import data

ALTER TABLE table_name ENABLE TRIGGER ALL;
```

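Increase work_mem: Raise the work_mem parameter for the current session if the import runs complex sorts or hash operations, for example an INSERT ... SELECT that joins or deduplicates staged data: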

```sql
SET work_mem = '256MB';
```

Troubleshooting Common PostgreSQL Import Issues

Data Conflicts: When importing data into a table with unique constraints, such as a primary key or unique index, you may encounter conflicts if the incoming data contains duplicate values. To resolve this, you can either update the existing records, skip the conflicting rows, or use an “upsert” (update/insert) strategy, which PostgreSQL supports through INSERT ... ON CONFLICT.
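One common way to apply an upsert during an import is to load the file into a staging table first and then merge it into the target with INSERT ... ON CONFLICT. The sketch below generates such a statement; the helper names and the staging-table approach are illustrative assumptions, and the target table must have a unique constraint or index on the key columns.

```python
def quote_ident(name):
    """Quote an SQL identifier by wrapping it in double quotes."""
    return '"' + name.replace('"', '""') + '"'


def build_upsert(target, staging, key_columns, data_columns):
    """Build an INSERT ... ON CONFLICT statement merging staging into target."""
    all_columns = list(key_columns) + list(data_columns)
    column_list = ", ".join(quote_ident(c) for c in all_columns)
    conflict_list = ", ".join(quote_ident(c) for c in key_columns)
    if data_columns:
        # Overwrite existing rows with the incoming (EXCLUDED) values.
        updates = ", ".join(
            f"{quote_ident(c)} = EXCLUDED.{quote_ident(c)}" for c in data_columns
        )
        action = f"DO UPDATE SET {updates}"
    else:
        # Nothing to update: skip rows whose key already exists.
        action = "DO NOTHING"
    return (
        f"INSERT INTO {quote_ident(target)} ({column_list}) "
        f"SELECT {column_list} FROM {quote_ident(staging)} "
        f"ON CONFLICT ({conflict_list}) {action};"
    )
```

Passing an empty `data_columns` list produces the “skip conflicting rows” variant. With DO UPDATE, PostgreSQL raises an error if the staging table itself contains two rows with the same key, so deduplicate the staged data before merging.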

Referential Integrity Violations: If your PostgreSQL tables have foreign key constraints, the import process may fail if the referenced data is not present in the target tables. To address this, you can either ensure the necessary parent data is imported first, or temporarily disable the foreign key constraints during the import and re-enable them afterward.
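Importing parent data first amounts to ordering the tables by their foreign key dependencies. A small sketch using Python’s standard graphlib module (Python 3.9 or later) is shown below; the function name `import_order` and the table names in the usage note are hypothetical, and the dependency map is assumed to be supplied by hand or read from the schema separately.

```python
from graphlib import TopologicalSorter


def import_order(foreign_keys):
    """Return table names ordered so that referenced (parent) tables come first.

    foreign_keys maps each table to the set of tables it references.
    """
    # Ignore self-references (a table pointing at itself); they do not
    # affect the order in which whole tables are loaded.
    graph = {
        table: {parent for parent in parents if parent != table}
        for table, parents in foreign_keys.items()
    }
    # TopologicalSorter takes node -> predecessors, which matches
    # child -> parents: every parent is emitted before its children.
    return list(TopologicalSorter(graph).static_order())
```

For a map such as `{"orders": {"customers"}, "customers": set()}`, the result places `customers` before `orders`. If tables reference each other in a cycle, `static_order()` raises `graphlib.CycleError`; in that case the constraints have to be dropped or deferred for the duration of the import.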

Performance Bottlenecks: Large data imports can sometimes lead to performance issues, such as slow query execution or high resource utilization. In these cases, you may need to optimize your PostgreSQL configuration, such as adjusting the work_mem or maintenance_work_mem parameters, or implementing partitioning or indexing strategies to improve query performance.

By understanding these common issues and having a plan to address them, you can ensure your PostgreSQL import processes are reliable, efficient, and scalable.

Streamline Postgres Imports with Coefficient

Properly managing PostgreSQL data imports is essential for ensuring the integrity, accessibility, and usefulness of your data. By following best practices for optimization and troubleshooting, as well as leveraging tools like Coefficient to integrate your PostgreSQL data into spreadsheet workflows, you can streamline your data management processes and unlock the full potential of your PostgreSQL data.

To get started with Coefficient and start importing your PostgreSQL data into Google Sheets or Excel, visit https://coefficient.io/get-started and sign up for a free trial. The Coefficient team is also available to provide further assistance and guidance on optimizing your PostgreSQL data management and integration strategies.