Migrating data from BigQuery to PostgreSQL can be a complex process, but it’s often necessary for businesses looking to optimize their data management strategies.

This guide will walk you through the process of migrating from BigQuery to PostgreSQL, covering common challenges, benefits, and top migration methods.

BigQuery to PostgreSQL: Why Migrate and Key Considerations

BigQuery and PostgreSQL are both powerful database management systems, but they serve different purposes. BigQuery is a fully-managed, serverless data warehouse solution from Google Cloud, designed for analyzing massive datasets.

PostgreSQL, on the other hand, is an open-source relational database management system known for its reliability, feature robustness, and extensibility.

Reasons for Migrating from BigQuery to PostgreSQL

There are several reasons why you might consider migrating from BigQuery to PostgreSQL:

- Cost considerations: PostgreSQL can be more cost-effective for certain use cases, especially when dealing with smaller datasets or when you need more control over your infrastructure.

- Data control: PostgreSQL offers greater control over database management, allowing for fine-tuned optimizations and customizations.

- Specific workload requirements: Some applications may perform better with PostgreSQL’s relational model and query optimizer.

- Open-source flexibility: PostgreSQL’s open-source nature allows for extensive customization and community support.

Key Considerations Before Migration

Before you begin the migration process, consider the following:

- Data volume and complexity

- Schema differences between BigQuery and PostgreSQL

- Performance requirements

- Budget constraints

- Team expertise in managing PostgreSQL

Common Challenges in Database Migration

Migrating from BigQuery to PostgreSQL comes with several challenges:

Data Type Incompatibilities

BigQuery and PostgreSQL have different data types, which can cause issues during migration. For example, BigQuery’s ARRAY and STRUCT types don’t have direct equivalents in PostgreSQL.

Performance Issues During Large Data Transfers

Transferring large volumes of data can be time-consuming and may impact system performance. It’s crucial to plan for this and consider strategies to minimize downtime.

Maintaining Data Integrity and Consistency

Ensuring that all data is transferred accurately and completely is vital. This includes preserving relationships between tables and maintaining data quality.

Tools like Coefficient can assist in this process by allowing you to create blended reports pulling data from both the old and new databases during the migration, ensuring business continuity and allowing for real-time comparison of data between systems.

Handling Schema Changes and Differences

BigQuery and PostgreSQL have different schema structures. You’ll need to map BigQuery’s schema to PostgreSQL’s relational model, which may require significant restructuring.

Ensuring Minimal Downtime During Migration

Minimizing disruption to your business operations is crucial. Proper planning and execution are necessary to reduce downtime during the migration process.

Top Methods for Migrating BigQuery to PostgreSQL

There are several methods for migrating data from BigQuery to PostgreSQL:

Manual ETL Process

The manual Extract, Transform, Load (ETL) process involves:

- Extract: Export data from BigQuery, typically as CSV or JSON files.

EXPORT DATA

OPTIONS(

uri=’gs://bucket/folder/*.csv’,

format=’CSV’,

overwrite=true,

header=true,

field_delimiter=’,’

) AS

SELECT * FROM `project.dataset.table`;

- Transform: Convert data to fit PostgreSQL schema. This may involve:

- Data type conversions (e.g., BYTES to BYTEA)

- Restructuring nested data

- Handling BigQuery-specific functions

- Load: Import data into PostgreSQL using COPY command or copy meta-command.

COPY table_name FROM ‘/path/to/file.csv’ DELIMITER ‘,’ CSV HEADER;

Pros:

- Full control over the migration process

- No additional tools required

Cons:

- Time-consuming for large datasets

- Prone to human error

- Requires expertise in both systems

Using Google Cloud Data Fusion

Google Cloud Data Fusion is a fully managed, cloud-native data integration service that can help you build and manage ETL pipelines. It supports both BigQuery and PostgreSQL, making it a useful tool for migration.

For more information, check out the official documentation.

Steps:

- Create a new pipeline in Data Fusion

- Add a BigQuery source and PostgreSQL sink

- Configure data mapping and transformations

- Run and monitor the pipeline

Pros:

- Visual interface for building data pipelines

- Managed service with scalability

- Pre-built connectors for both systems

Cons:

- Learning curve for the Data Fusion interface

- Potential additional costs

Leveraging Apache Airflow with BigQueryToPostgresOperator

Apache Airflow is an open-source platform for developing, scheduling, and monitoring batch-oriented workflows. It includes a BigQueryToPostgresOperator that can simplify the migration process.

Apache Airflow is an open-source platform for orchestrating complex workflows.

Steps:

- Set up Airflow environment

- Create a DAG (Directed Acyclic Graph) for the migration

- Use BigQueryToPostgresOperator for data transfer

from airflow import DAG

from airflow.contrib.operators.bigquery_to_postgres import BigQueryToPostgresOperator

with DAG(‘bigquery_to_postgres’, schedule_interval=’@daily’) as dag:

transfer_task = BigQueryToPostgresOperator(

task_id=’transfer_data’,

sql=’SELECT * FROM `project.dataset.table`’,

postgres_conn_id=’postgres_default’,

bigquery_conn_id=’bigquery_default’,

postgres_table=’destination_table’,

dag=dag

)

Pros:

- Highly customizable and extensible

- Can handle complex migration scenarios

- Good for scheduling and monitoring long-running migrations

Cons:

- Requires Airflow setup and maintenance

- Steeper learning curve

Custom Python Scripting

Python provides libraries for interacting with both BigQuery and PostgreSQL, allowing you to create custom migration scripts. Here’s a simple example:

from google.cloud import bigquery

import psycopg2

# Connect to BigQuery

bq_client = bigquery.Client()

# Connect to PostgreSQL

pg_conn = psycopg2.connect(“dbname=your_db user=your_user password=your_password”)

pg_cursor = pg_conn.cursor()

# Execute BigQuery query

query = “SELECT * FROM `your_project.your_dataset.your_table`”

query_job = bq_client.query(query)

# Insert data into PostgreSQL

for row in query_job:

pg_cursor.execute(“INSERT INTO your_table VALUES (%s, %s, %s)”, row)

pg_conn.commit()

pg_cursor.close()

pg_conn.close()

For more complex scenarios, you can find helpful discussions and examples on Stack Overflow.

Pros:

- Complete control over the migration process

- Can handle complex transformations

- Scalable for large datasets with proper optimization

Cons:

- Requires programming skills

- Need to handle errors and edge cases manually

Utilizing Third-party ETL Tools

Tools like Airbyte and Hevo Data offer pre-built connectors for both BigQuery and PostgreSQL, which can streamline the migration process.

Supporting A No-Code Migration with Coefficient

While not a direct migration tool, Coefficient can significantly support your BigQuery to PostgreSQL migration process. It’s a tool that pulls live data from your BigQuery into spreadsheets like Google Sheets or Excel. This makes it easier to examine your data without messing with your live database.

Benefits of Using Coefficient for BigQuery to PostgreSQL Migration

Pre-Migration Data Analysis:

- Use Coefficient to connect to your source database and analyze data structures.

- Create reports on data volume, types, and usage patterns to inform your migration strategy.

Transitional Reporting:

- During the migration, use Coefficient to create blended reports pulling data from both the old and new databases.

- This ensures business continuity and allows for real-time comparison of data between systems.

Post-Migration Validation:

- After migration, use Coefficient’s “Export to..” feature to compare data between the old and new systems.

- Set up automated checks to ensure data integrity and catch any discrepancies.

Data Cleanup and Enrichment:

- Leverage Coefficient to identify and clean up data inconsistencies in the new database.

- Use the “Export to….” feature to push any necessary updates or corrections back to the new database.

Migrating Data from BigQuery to PostgreSQL with Coefficient

Step 1. Connect Both Data Sources

First, ensure Coefficient can access both BigQuery and PostgreSQL.

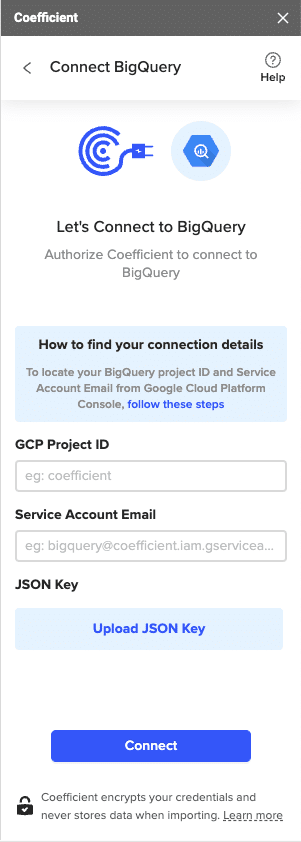

For BigQuery, follow these steps:

- Open the Coefficient sidebar and go to the “Connected Sources” menu.

- Select BigQuery, and provide your GCP Project ID, Service Account Email, and JSON key.

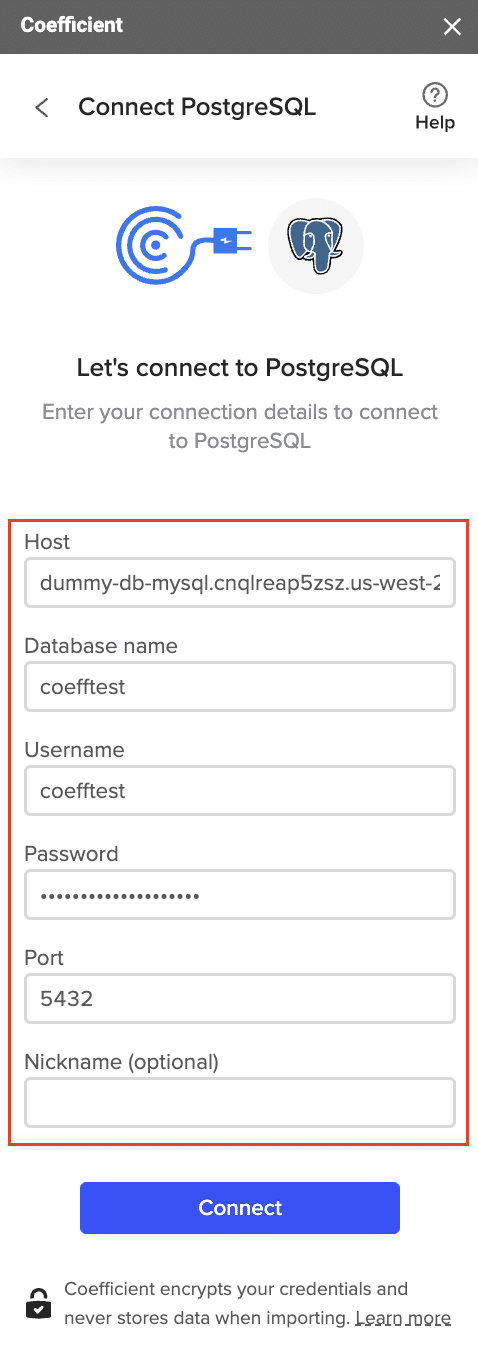

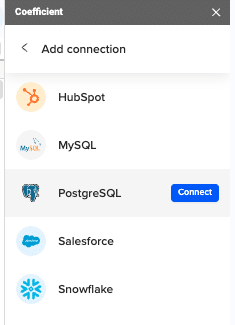

For PostgreSQL:

- Open the Coefficient sidebar and select “Connected Sources”.

- Click “Add Connection”, then “Connect to PostgreSQL”.

- Enter the required fields: Host, Database name, Username, Password, and Port.

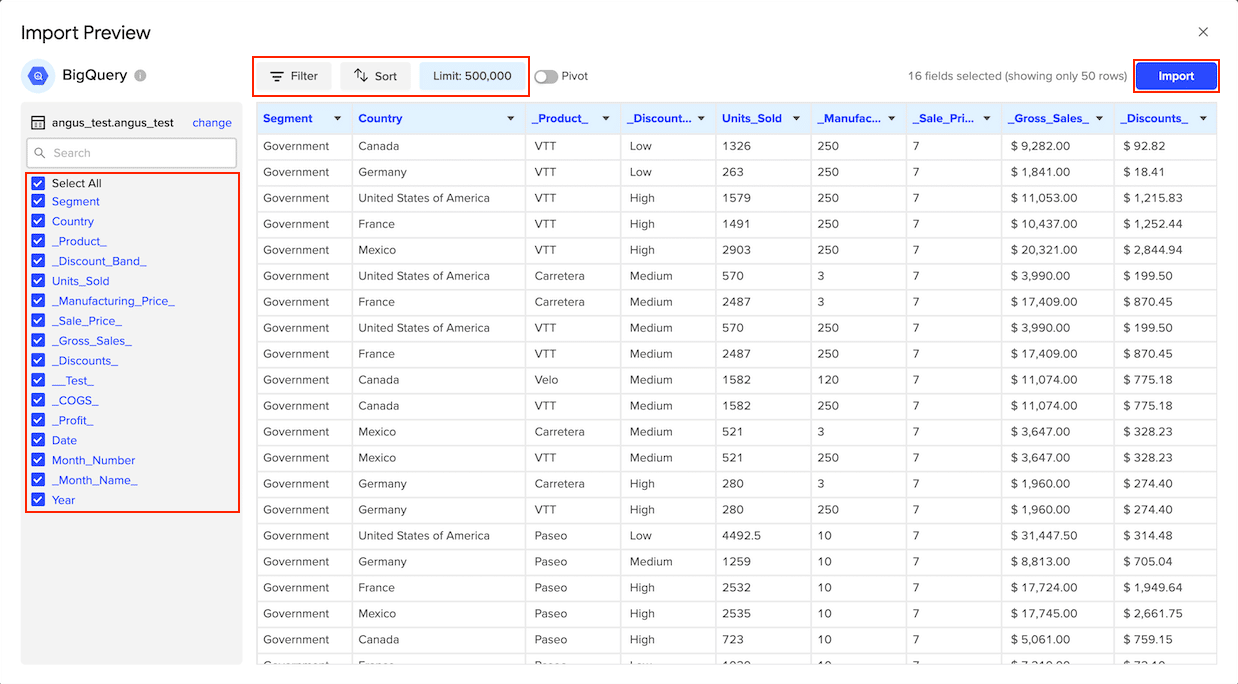

Step 2. Import Data from BigQuery

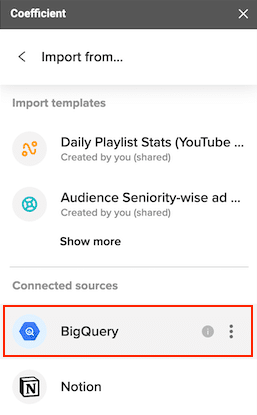

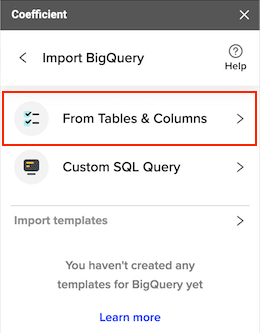

- From the Coefficient sidebar, select “Import from” and choose BigQuery.

- Choose “From Tables/Columns” or “Custom SQL Query” based on your needs.

- Customize your import by selecting the tables, columns, and adding any necessary filters.

Click Import when finished.

Step 3. Prepare Data in Google Sheets/Excel

Once the data is imported, it will appear in your spreadsheet. Review and organize the data to ensure:

- Proper headers are set.

- Data accuracy is verified.

- Necessary transformations are made to fit the right schema.

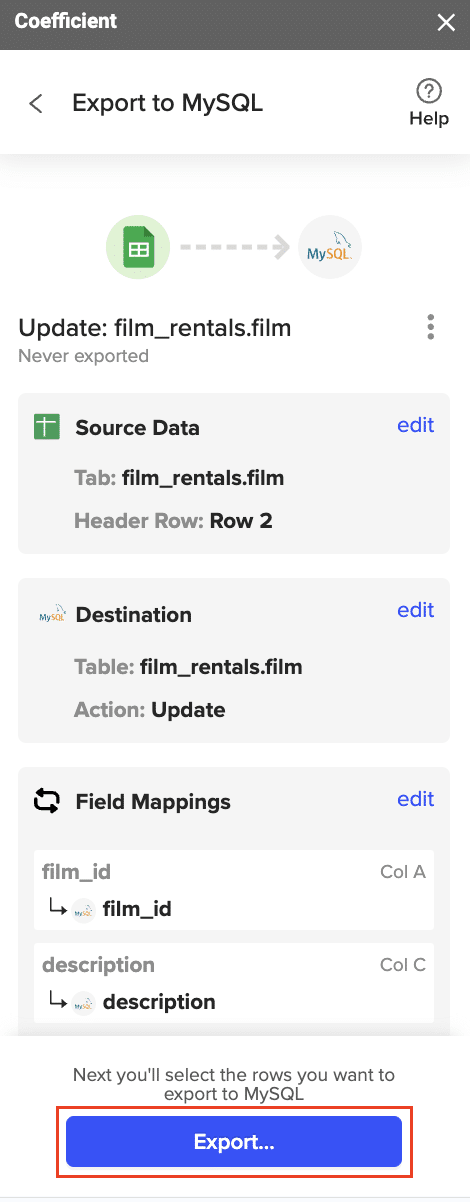

Step 4. Export Data to PostgreSQL

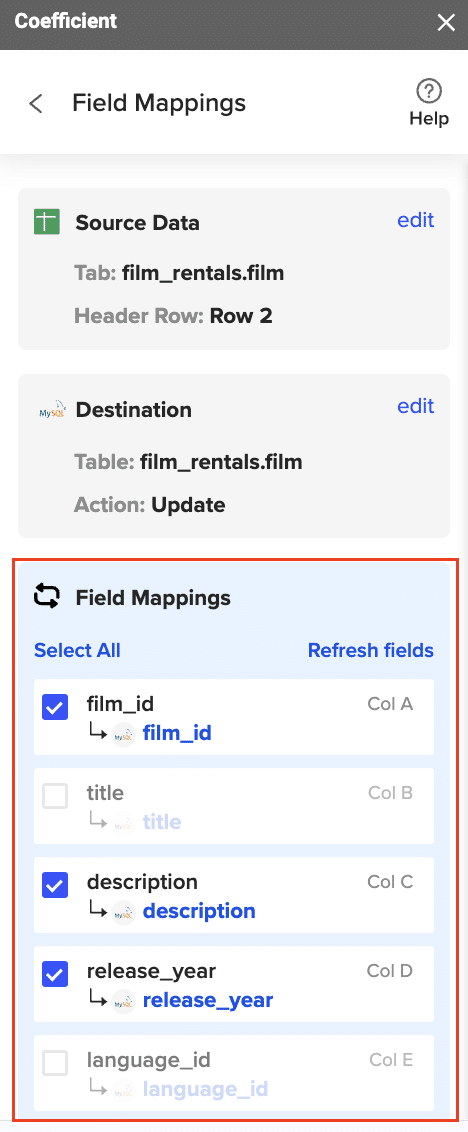

- Choose “Export to” in Coefficient and select PostgreSQL.

- Map the columns from your Google Sheet to the PostgreSQL table fields.

- Specify the appropriate action (Insert, Update, Upsert, or Delete) and confirm your settings.

- Highlight the specific rows in your sheet to export or choose to export all rows, then click “Export” to push the data into PostgreSQL.

When the Export to PostgreSQL is complete (and successful), you can see the number of rows exported/skipped.

For more detailed information on connecting BigQuery to spreadsheets, check out our guide on BigQuery Integration for Google Sheets.

BigQuery to PostgreSQL: Best Practices and Troubleshooting

Best Practices

- Thorough Planning and Assessment

- Conduct a comprehensive audit of your BigQuery data

- Map out all dependencies and downstream applications

- Create a detailed migration plan with clear milestones and rollback points

- Incremental Migration Strategy

- Break the migration into smaller, manageable chunks

- Start with less critical or smaller datasets

- Gradually move to larger and more complex data structures

- This approach minimizes risk and allows for easier troubleshooting

- Data Validation and Testing

- Implement rigorous data validation checks at each stage of the migration

- Use tools like Coefficient to compare data between BigQuery and PostgreSQL

- Perform both automated and manual checks on data integrity, completeness, and accuracy

- Test queries and applications on the migrated data before full cutover

- Performance Optimization

- Analyze and optimize your PostgreSQL schema design

- Implement appropriate indexing strategies based on query patterns

- Use partitioning for large tables to improve query performance

- Consider using materialized views for complex, frequently-used queries

- Handle Large Datasets Efficiently

- For very large datasets, use cloud storage (e.g., Google Cloud Storage) as an intermediate step

- Implement parallel processing techniques for data loading

- Optimize network settings to maximize transfer speeds

- Maintain Business Continuity

- Plan the migration during off-peak hours to minimize disruption

- Set up a temporary dual-write system if possible, writing to both BigQuery and PostgreSQL during transition

- Use tools like Coefficient to create blended reports, ensuring data accessibility during migration

- Security and Compliance

- Ensure data encryption during transfer and at rest

- Review and update access controls in the new PostgreSQL environment

- Document all changes for compliance and audit purposes

- Team Preparation and Training

- Provide training on PostgreSQL for team members familiar with BigQuery

- Update documentation and operational procedures

- Consider engaging PostgreSQL experts for the initial setup and optimization

Troubleshooting Common Issues

- Data Type Mismatches

- Issue: BigQuery and PostgreSQL have different data type systems

- Solution:

- Create a comprehensive data type mapping document

- Pay special attention to complex types like ARRAY and STRUCT in BigQuery

- Use appropriate casting and conversion functions in your migration scripts

- For unsupported types, consider serializing data (e.g., to JSON) or restructuring

- Schema and Constraint Differences

- Issue: PostgreSQL enforces constraints more strictly than BigQuery

- Solution:

- Identify and resolve any data integrity issues in BigQuery before migration

- Temporarily disable constraints during initial data load, then enable and validate

- Use tools like Coefficient to identify data that doesn’t meet PostgreSQL constraints

- Performance Degradation

- Issue: Queries that performed well in BigQuery are slow in PostgreSQL

- Solution:

- Analyze query execution plans using EXPLAIN ANALYZE

- Optimize indexes based on common query patterns

- Consider denormalizing data or creating materialized views for complex queries

- Adjust PostgreSQL configuration parameters (e.g., work_mem, effective_cache_size)

- Connection and Network Issues

- Issue: Timeouts or slow data transfer during migration

- Solution:

- Check and optimize network settings between migration source and destination

- Increase timeout and retry settings in migration tools

- Consider using a dedicated, high-bandwidth network connection for large migrations

- Implement robust error handling and retry logic in custom scripts

- Data Inconsistencies Post-Migration

- Issue: Discrepancies between source and migrated data

- Solution:

- Implement thorough validation scripts to compare source and destination data

- Use Coefficient’s comparison features to identify and visualize differences

- Check for any data transformations or type conversions that might have altered data

- Verify that all data has been migrated, including checking row counts and sampling data

- Handling NULL Values and Default Behaviors

- Issue: Differences in NULL handling between BigQuery and PostgreSQL

- Solution:

- Review and adjust NULL constraints in PostgreSQL schema

- Check for any implicit type conversions that might affect NULL handling

- Adjust application logic if necessary to handle NULLs consistently

- Dealing with BigQuery-Specific Features

- Issue: Loss of BigQuery-specific functionalities (e.g., nested and repeated fields)

- Solution:

- Redesign schema to flatten nested structures where necessary

- Use JSON or JSONB data types in PostgreSQL to maintain complex structures

- Adjust queries and application logic to work with the new data structure

- Managing Long-Running Migrations

- Issue: Extended downtime or inconsistencies during long migrations

- Solution:

- Implement a change data capture (CDC) system to track changes during migration

- Use tools that support resumable transfers in case of interruptions

- Consider a phased migration approach with multiple cutover points

BigQuery to PostgreSQL: Optimizing Your Data Infrastructure

Migrating from BigQuery to PostgreSQL requires careful planning but can offer benefits like cost-effectiveness and increased control. The key to a successful transition lies in maintaining data integrity and minimizing disruption to your operations.

Post-migration, focus on leveraging PostgreSQL’s strengths. Optimize your queries, take advantage of its robust indexing capabilities, and ensure your team is equipped to work with the new system.

Want to maximize the value of your PostgreSQL database? Discover how to connect your data directly to Excel and Google Sheets for efficient analysis and reporting.