Importing data into MySQL is a crucial skill for data professionals.

Whether you’re migrating databases, integrating new data sources, or updating existing records, knowing how to import data into MySQL efficiently can save time and resources.

This guide covers various methods of importing data into MySQL. We’ll explore step-by-step instructions, best practices, and performance optimization tips for each method.

Let’s dive in!

MySQL Workbench Import Wizard: Your Go-To GUI Solution

The MySQL Workbench Import Wizard offers a user-friendly graphical interface for importing data into MySQL databases. This method is particularly useful for those who prefer visual tools over command-line interfaces.

Step-by-step guide to using the MySQL Workbench import wizard:

- Open MySQL Workbench and connect to your database.

- Select the target schema in the Navigator pane.

- Click “Server” in the top menu, then choose “Data Import.”

- Select “Import from Self-Contained File” and browse to your data file.

- Choose the target schema or create a new one.

- Select the tables you want to import in the “Objects to Import” section.

- Click “Start Import” to begin the process.

Pros and cons of this method:

Pros:

- User-friendly interface

- Visual representation of import options

- Suitable for small to medium-sized datasets

Cons:

- Limited customization options

- May be slower for large datasets

- Requires MySQL Workbench installation

Best practices for efficient imports using the wizard:

- Ensure your data file is properly formatted and free of errors.

- Use consistent data types across your source file and target table.

- Import data in smaller batches if dealing with large datasets.

- Disable foreign key checks temporarily for faster imports.

- Create indexes after importing data to improve performance.
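
If your source is a large CSV file, the "smaller batches" advice above can be sketched in a few lines of Python. This is a minimal illustration, not part of MySQL Workbench: the function name `split_csv` and the `_partNNN` naming scheme are assumptions made for the example.

```python
import csv
from pathlib import Path


def split_csv(source, out_dir, rows_per_file):
    """Split `source` into CSV files holding at most `rows_per_file` data rows.

    The header row is repeated at the top of every output file.
    Returns the list of files written, in order.
    """
    if rows_per_file < 1:
        raise ValueError("rows_per_file must be at least 1")
    source = Path(source)
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)

    written = []
    with source.open(newline="", encoding="utf-8") as src:
        reader = csv.reader(src)
        header = next(reader, None)
        if header is None:
            return written  # empty input: nothing to split

        out_file = None
        writer = None
        try:
            for index, row in enumerate(reader):
                # Start a new output file every `rows_per_file` rows.
                if index % rows_per_file == 0:
                    if out_file is not None:
                        out_file.close()
                    part = out_dir / f"{source.stem}_part{len(written) + 1:03d}.csv"
                    out_file = part.open("w", newline="", encoding="utf-8")
                    writer = csv.writer(out_file)
                    writer.writerow(header)
                    written.append(part)
                writer.writerow(row)
        finally:
            if out_file is not None:
                out_file.close()
    return written
```

Each resulting file can then be imported on its own, which keeps a single failure from forcing you to restart the whole load.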

Command Line Magic: Mastering mysqldump and mysqlimport

For those comfortable with command-line interfaces, mysqldump and mysqlimport are powerful tools for exporting and importing MySQL data.

Detailed instructions for using mysqldump and mysqlimport:

Using mysqldump to export data:

- Open your terminal or command prompt.

- Use the following command structure:

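    mysqldump -u [username] -p [database_name] > [filename.sql]

- Enter your password when prompted.

- To load the dump on the target server later, feed the file to the mysql client: mysql -u [username] -p [database_name] < [filename.sql]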

Using mysqlimport to import data:

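- Prepare your data file (CSV, TSV, or another delimited text format). mysqlimport loads each file into the table whose name matches the file name without its extension, so customers.txt is loaded into the customers table.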

- Use the following command structure:

    mysqlimport -u [username] -p --local [database_name] [filename.txt]

- Enter your password when prompted.
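
For a comma-separated file with a header row, mysqlimport needs a few extra options. The sketch below builds the argument list in Python so it can be passed to `subprocess.run` without shell quoting problems. The helper names are hypothetical; the options themselves (`--fields-terminated-by`, `--fields-optionally-enclosed-by`, `--ignore-lines`) are standard mysqlimport options.

```python
from pathlib import Path


def build_mysqlimport_args(user, database, data_file, header_lines=1):
    """Return the argv list for importing a CSV file with mysqlimport."""
    return [
        "mysqlimport",
        f"--user={user}",
        "--password",                         # no value: mysqlimport prompts for it
        "--local",                            # read the file from the client host
        "--fields-terminated-by=,",
        '--fields-optionally-enclosed-by="',
        f"--ignore-lines={header_lines}",     # skip the header row(s)
        database,
        str(data_file),
    ]


def target_table(data_file):
    """Table mysqlimport loads into: the file name without its extension.

    Assumes a simple name with a single extension, such as customers.csv.
    """
    return Path(data_file).stem


# Usage (requires the mysqlimport client on PATH):
#   import subprocess
#   subprocess.run(build_mysqlimport_args("app", "shop", "customers.csv"), check=True)
```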

Scenarios where command-line tools are most effective:

- Automating regular backups and restores

- Migrating large databases between servers

- Integrating database operations into scripts or cron jobs

- Performing quick imports or exports without a GUI

Tips for automating imports using shell scripts:

Create a shell script with your mysqlimport command:

    #!/bin/bash
    mysqlimport -u [username] -p[password] --local [database_name] [filename.txt]

Make the script executable:

chmod +x import_script.sh

Schedule the script using cron:

0 2 * * * /path/to/import_script.sh

This setup will run your import script daily at 2 AM.
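
A password written directly into a script is visible to anyone who can read the file or list running processes. One common alternative is a MySQL option file passed with `--defaults-extra-file`. The sketch below is a minimal illustration under that assumption; the function names and file locations are hypothetical.

```python
import os
from pathlib import Path


def write_client_option_file(path, user, password):
    """Write a [client] option file that only the current user can read.

    Note: a password containing '#' or quotes would need quoting in the file.
    """
    path = Path(path)
    content = f"[client]\nuser={user}\npassword={password}\n"
    # O_EXCL refuses to overwrite an existing file; 0o600 = owner read/write only.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "w", encoding="utf-8") as handle:
        handle.write(content)
    return path


def build_scheduled_import_args(option_file, database, data_file):
    """argv for a mysqlimport run that takes credentials from `option_file`."""
    return [
        "mysqlimport",
        f"--defaults-extra-file={option_file}",  # must be the first option
        "--local",
        database,
        str(data_file),
    ]
```

The cron entry can then call a small Python script that passes this argument list to `subprocess.run`, with no password on the command line.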

LOAD DATA INFILE: Turbocharge Your CSV Imports

The LOAD DATA INFILE command is a high-performance option for importing large volumes of data from CSV or text files directly into MySQL tables.

Explanation of the LOAD DATA INFILE syntax and usage:

Basic syntax:

    LOAD DATA INFILE '[file_path]'
    INTO TABLE [table_name]
    FIELDS TERMINATED BY ','
    ENCLOSED BY '"'
    LINES TERMINATED BY '\n'
    IGNORE 1 ROWS;

Usage example:

    LOAD DATA INFILE '/path/to/data.csv'
    INTO TABLE customers
    FIELDS TERMINATED BY ','
    ENCLOSED BY '"'
    LINES TERMINATED BY '\n'
    IGNORE 1 ROWS;

Performance benefits for large dataset imports:

- Faster than INSERT statements for bulk data

- Minimal overhead, as the server parses a single statement instead of one INSERT per row

- Efficient memory usage, reading data directly from the file

Security considerations and best practices:

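- Use the LOCAL keyword when the file lives on the client machine; this requires local_infile to be enabled on both the server and the client.

- Without LOCAL, the server reads the file itself, so the account needs the FILE privilege and the path must fall inside the directory named by secure_file_priv when that variable is set.

- Validate and sanitize data before import, and never build the file path or table name from untrusted input, to prevent SQL injection.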

- Use IGNORE or REPLACE options to handle duplicate key errors
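
The options above can be combined in a small helper. The following is a sketch, assuming a DB-API cursor that substitutes `%s` placeholders on the client side (as the default MySQL Connector/Python and PyMySQL cursors do); `build_load_data_sql` and `load_csv` are hypothetical names. With Connector/Python, client-side files typically also require connecting with `allow_local_infile=True`.

```python
import re

# Accept only plain identifiers so the table name cannot carry SQL.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def build_load_data_sql(table, local=True, on_duplicate=None, skip_header=True):
    """Build a LOAD DATA statement with a %s placeholder for the file path."""
    if not _IDENTIFIER.match(table):
        raise ValueError(f"unsafe table name: {table!r}")
    if on_duplicate not in (None, "IGNORE", "REPLACE"):
        raise ValueError("on_duplicate must be None, 'IGNORE' or 'REPLACE'")

    parts = ["LOAD DATA LOCAL INFILE %s" if local else "LOAD DATA INFILE %s"]
    if on_duplicate:
        parts.append(on_duplicate)  # how to treat rows that hit a duplicate key
    parts.append(f"INTO TABLE `{table}`")
    parts.append("FIELDS TERMINATED BY ',' ENCLOSED BY '\"'")
    parts.append("LINES TERMINATED BY '\\n'")
    if skip_header:
        parts.append("IGNORE 1 ROWS")
    return "\n".join(parts)


def load_csv(cursor, path, table, **options):
    """Run the statement; the driver escapes the file path."""
    cursor.execute(build_load_data_sql(table, **options), (str(path),))
```

Validating the table name and passing the path as a parameter keeps both out of hand-built SQL strings.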

Example scenarios where LOAD DATA INFILE excels:

- Importing large CSV files from data exports

- Regular updates of product catalogs or inventory lists

- Bulk loading of log files or transaction records

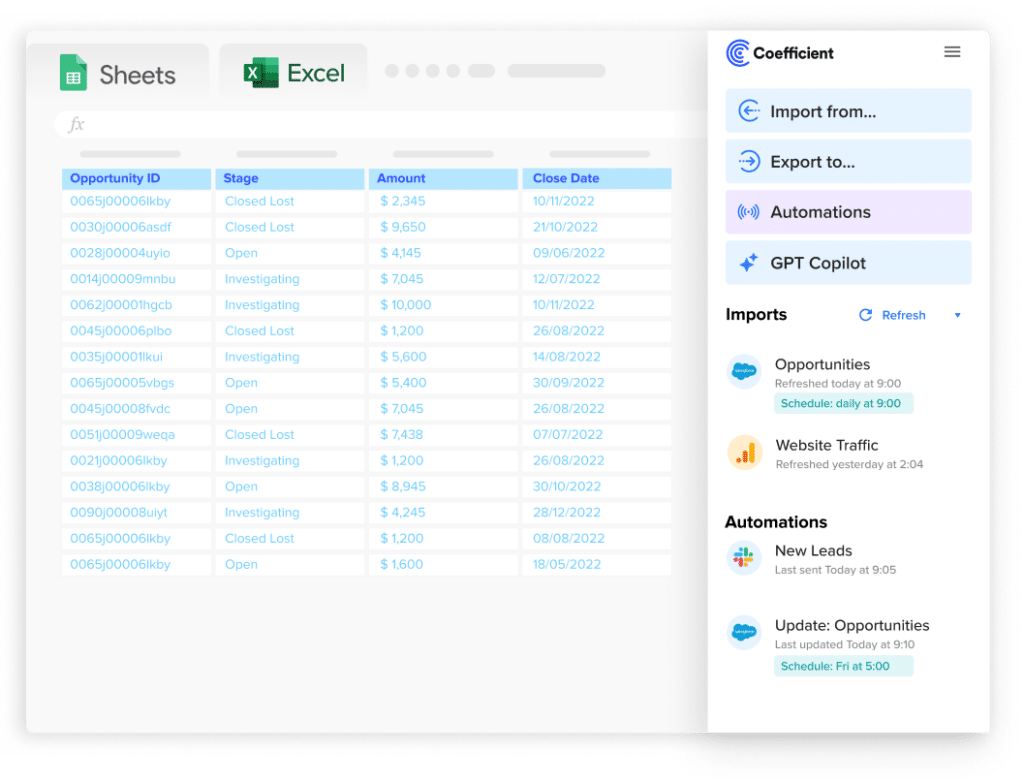

How Coefficient can streamline CSV data imports

Coefficient allows you to connect MySQL data directly to Google Sheets and Excel, providing an easy way to import and export MySQL data from spreadsheets.

- Set up automated data imports from MySQL to spreadsheets

- Schedule regular updates to keep your data fresh

- Use custom SQL queries for precise data extraction

This integration enables real-time data access and analysis, bridging the gap between bulk imports and live data management.

Programmatic Imports: MySQL Connectors and APIs

For developers and data engineers, programmatic imports using MySQL connectors and APIs offer flexibility and integration capabilities.

Overview of popular MySQL connectors:

Python (mysql-connector-python):

    import mysql.connector

    cnx = mysql.connector.connect(user='username', password='password',
                                  host='127.0.0.1', database='mydatabase')
    cursor = cnx.cursor()

    # Parameterized INSERT: the connector escapes the values
    query = "INSERT INTO customers (name, email) VALUES (%s, %s)"
    data = ("John Doe", "john.doe@example.com")  # placeholder address
    cursor.execute(query, data)

    cnx.commit()
    cursor.close()
    cnx.close()
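
In practice the rows usually come from a file rather than a literal. Here is a minimal sketch that feeds CSV rows into the same parameterized INSERT. It assumes a CSV with `name` and `email` header columns and accepts any DB-API connection; `rows_from_csv` and `insert_rows` are hypothetical helper names.

```python
import csv


def rows_from_csv(path, columns):
    """Yield one tuple per CSV row, holding `columns` in the given order."""
    with open(path, newline="", encoding="utf-8") as handle:
        reader = csv.DictReader(handle)
        missing = [c for c in columns if c not in (reader.fieldnames or [])]
        if missing:
            raise ValueError(f"CSV is missing columns: {missing}")
        for row in reader:
            yield tuple(row[c] for c in columns)


def insert_rows(cnx, rows):
    """Insert (name, email) tuples one statement at a time, then commit once."""
    cursor = cnx.cursor()
    try:
        for row in rows:
            cursor.execute(
                "INSERT INTO customers (name, email) VALUES (%s, %s)", row
            )
        cnx.commit()
    finally:
        cursor.close()


# Usage, with `cnx` being an open connection:
#   insert_rows(cnx, rows_from_csv("customers.csv", ["name", "email"]))
```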

Java (MySQL Connector/J):

    import java.sql.*;

    public class MySQLImport {
        public static void main(String[] args) {
            String url = "jdbc:mysql://localhost:3306/mydatabase";
            String user = "username";
            String password = "password";

            // try-with-resources closes the statement and connection automatically
            try (Connection conn = DriverManager.getConnection(url, user, password);
                 PreparedStatement pstmt = conn.prepareStatement(
                         "INSERT INTO customers (name, email) VALUES (?, ?)")) {
                pstmt.setString(1, "John Doe");
                pstmt.setString(2, "john.doe@example.com");
                pstmt.executeUpdate();
            } catch (SQLException e) {
                e.printStackTrace();
            }
        }
    }

PHP (mysqli):

    <?php
    $mysqli = new mysqli("localhost", "username", "password", "mydatabase");

    if ($mysqli->connect_errno) {
        echo "Failed to connect to MySQL: " . $mysqli->connect_error;
        exit();
    }

    $name = "John Doe";
    $email = "john.doe@example.com";

    // "ss" declares both bound parameters as strings
    $stmt = $mysqli->prepare("INSERT INTO customers (name, email) VALUES (?, ?)");
    $stmt->bind_param("ss", $name, $email);
    $stmt->execute();

    $stmt->close();
    $mysqli->close();
    ?>

Bulk Import Strategies: Optimize Performance for Large Datasets

When dealing with large volumes of data, optimizing your import process becomes crucial. Here are some strategies to improve import speed and efficiency.

Techniques for improving import speed with large volumes of data:

Batch inserts: Instead of inserting rows one by one, group them into batches. This reduces the number of round trips to the server and the number of transactions, which improves overall performance. Example in Python:

    import mysql.connector

    cnx = mysql.connector.connect(user='username', password='password',
                                  host='127.0.0.1', database='mydatabase')
    cursor = cnx.cursor()

    query = "INSERT INTO customers (name, email) VALUES (%s, %s)"
    data = [
        ("John Doe", "john.doe@example.com"),
        ("Jane Smith", "jane.smith@example.com"),
        ("Bob Johnson", "bob.johnson@example.com")
    ]

    # Insert every tuple in data with a single executemany call
    cursor.executemany(query, data)

    cnx.commit()
    cursor.close()
    cnx.close()

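Disable autocommit: Turn off autocommit and commit manually after each batch of inserts to reduce I/O operations. MySQL Connector/Python leaves autocommit off by default, so the explicit assignment here mainly documents the intent.

    cnx.autocommit = False
    # Perform batch inserts
    cnx.commit()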
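
Batching and manual commits work well together. The sketch below streams rows in fixed-size batches and commits after each one. It accepts any DB-API connection; `chunked`, `insert_in_batches`, and the default batch size of 1000 are illustrative assumptions, not tuned values.

```python
from itertools import islice


def chunked(rows, size):
    """Yield lists of at most `size` items from any iterable."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def insert_in_batches(cnx, query, rows, batch_size=1000):
    """Send rows with executemany, committing once per batch.

    On error the current, uncommitted batch is rolled back and the
    exception is re-raised. Returns the number of rows committed.
    """
    cursor = cnx.cursor()
    total = 0
    try:
        for batch in chunked(rows, batch_size):
            cursor.executemany(query, batch)
            cnx.commit()
            total += len(batch)
    except Exception:
        cnx.rollback()
        raise
    finally:
        cursor.close()
    return total
```

Because `rows` can be a generator, the full dataset never has to sit in memory at once.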

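Disable indexes during import: Temporarily disable indexes before the import and rebuild them afterward to speed up the insert process. DISABLE KEYS only affects non-unique indexes on MyISAM tables; it has no effect on InnoDB, where the equivalent approach is to create secondary indexes after the load.

    ALTER TABLE customers DISABLE KEYS;
    -- Perform bulk insert
    ALTER TABLE customers ENABLE KEYS;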

Partitioning and indexing strategies for efficient data loading:

Table partitioning: Divide large tables into smaller, more manageable pieces based on a partition key.

    CREATE TABLE orders (
        id INT,
        order_date DATE,
        customer_id INT
    )
    PARTITION BY RANGE (YEAR(order_date)) (
        PARTITION p0 VALUES LESS THAN (2020),
        PARTITION p1 VALUES LESS THAN (2021),
        PARTITION p2 VALUES LESS THAN (2022),
        PARTITION p3 VALUES LESS THAN MAXVALUE
    );
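
Writing one partition per year by hand gets tedious for longer date ranges. As a rough sketch, the clause can be generated instead; `build_year_partitions` is a hypothetical helper, and the `p0`, `p1`, ... naming simply mirrors the example above.

```python
def build_year_partitions(first_year, last_year, column="order_date"):
    """Build a PARTITION BY RANGE clause with one partition per year.

    The first partition also catches rows older than `first_year`, and a
    final MAXVALUE partition catches rows newer than `last_year`.
    """
    if last_year < first_year:
        raise ValueError("last_year must not be before first_year")
    if not column.isidentifier():
        raise ValueError(f"unsafe column name: {column!r}")

    lines = []
    for number, year in enumerate(range(first_year, last_year + 1)):
        lines.append(f"    PARTITION p{number} VALUES LESS THAN ({year + 1}),")
    lines.append(f"    PARTITION p{len(lines)} VALUES LESS THAN MAXVALUE")
    return f"PARTITION BY RANGE (YEAR({column})) (\n" + "\n".join(lines) + "\n)"
```

Calling `build_year_partitions(2019, 2021)` reproduces the four partitions shown above, ready to append to a CREATE TABLE statement.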

Delayed indexing: Create indexes after data import to avoid the overhead of index updates during the insert process.

    -- After bulk insert
    CREATE INDEX idx_customer_id ON orders (customer_id);
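
When the index already exists, the same idea can be applied by dropping it for the duration of the load. The following context manager is one possible sketch, assuming a DB-API cursor and a single-column secondary index; `deferred_index` is a hypothetical name.

```python
from contextlib import contextmanager


@contextmanager
def deferred_index(cursor, table, index_name, column):
    """Drop a secondary index for the duration of a bulk load, then recreate it.

    The index is recreated even if the load raises, so the table is never
    left without it.
    """
    for identifier in (table, index_name, column):
        if not identifier.isidentifier():
            raise ValueError(f"unsafe identifier: {identifier!r}")

    cursor.execute(f"DROP INDEX `{index_name}` ON `{table}`")
    try:
        yield
    finally:
        cursor.execute(f"CREATE INDEX `{index_name}` ON `{table}` (`{column}`)")


# Usage, with `cursor` being an open cursor:
#   with deferred_index(cursor, "orders", "idx_customer_id", "customer_id"):
#       ...  # run the bulk insert here
```

Rebuilding one index over the finished table is generally cheaper than updating it for every inserted row, though the rebuild itself can take a while on very large tables.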

Comparison of bulk insert methods for different scenarios:

- LOAD DATA INFILE: Best for loading large CSV files directly into MySQL.

- Batch inserts: Suitable for programmatic imports with moderate data volumes.

- INSERT … SELECT: Efficient for copying data between tables within the same database.

- mysqldump and mysqlimport: Ideal for database migrations or full table imports.

Data Validation and Integrity: Ensure Accurate Imports

Maintaining data quality and integrity is crucial when importing data into MySQL. Let’s explore some strategies and tools to ensure your imported data is accurate and consistent.

Importance of data validation during the import process:

Data validation helps prevent errors, maintain data quality, and ensure the reliability of your database. It’s essential to validate data both before and during the import process to catch issues early and maintain the integrity of your MySQL database.

Key aspects of data validation include:

- Data type checking

- Range validation

- Format validation (e.g., email addresses, phone numbers)

- Uniqueness checks for primary keys

- Referential integrity for foreign keys
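
Several of these checks can run on the source file before anything reaches MySQL. Below is a minimal pre-import sketch, assuming each row is a dict with hypothetical `id`, `email`, and `age` fields; the age bounds and the deliberately loose email pattern are example rules, not recommendations.

```python
import re

# Loose shape check only: something@something.something, no spaces.
_EMAIL = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_employees(rows, min_age=18, max_age=65):
    """Return (row_number, problem) pairs for rows that fail a check."""
    problems = []
    seen_ids = set()
    for number, row in enumerate(rows, start=1):
        # Data type and range validation
        try:
            age = int(row.get("age"))
        except (TypeError, ValueError):
            problems.append((number, "age is not an integer"))
        else:
            if not min_age <= age <= max_age:
                problems.append((number, "age out of range"))

        # Format validation
        if not _EMAIL.match(row.get("email") or ""):
            problems.append((number, "invalid email format"))

        # Uniqueness check for the primary key
        row_id = row.get("id")
        if row_id in seen_ids:
            problems.append((number, "duplicate id"))
        seen_ids.add(row_id)
    return problems
```

Rows read with `csv.DictReader` can be passed in directly, and an empty result means the file passed every check.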

Tools and techniques for data quality assurance post-import:

MySQL’s CHECK constraints: Use CHECK constraints to enforce data validation rules directly in your table definition. MySQL enforces them from version 8.0.16 onward; earlier versions parse the clause but ignore it.

    CREATE TABLE employees (
        id INT PRIMARY KEY,
        name VARCHAR(100) NOT NULL,
        email VARCHAR(100),
        age INT,
        CHECK (age >= 18 AND age <= 65)
    );

Stored procedures for validation: Create stored procedures to perform complex validation checks on imported data.

    DELIMITER //

    CREATE PROCEDURE validate_employee_data()
    BEGIN
        -- Check for duplicate email addresses
        SELECT email, COUNT(*) AS email_count
        FROM employees
        GROUP BY email
        HAVING email_count > 1;

        -- Check for invalid age ranges
        SELECT id, name, age
        FROM employees
        WHERE age < 18 OR age > 65;
    END //

    DELIMITER ;

Data profiling tools: Use a data profiling tool, or plain aggregate queries (row counts, NULL counts, minimum and maximum values per column), to analyze your imported data for patterns, anomalies, and potential issues.

Custom scripts for data auditing: Develop custom scripts to perform regular audits of your imported data, checking for inconsistencies or violations of business rules.
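
A custom audit can be as small as a script that runs a fixed set of checks and reports what it finds. The sketch below reuses the two employee checks from the stored procedure and accepts any DB-API cursor; `audit_employees` is a hypothetical name.

```python
DUPLICATE_EMAILS = """
SELECT email, COUNT(*) AS email_count
FROM employees
GROUP BY email
HAVING COUNT(*) > 1
"""

INVALID_AGES = """
SELECT id, name, age
FROM employees
WHERE age < 18 OR age > 65
"""


def audit_employees(cursor):
    """Run each check and return the offending rows keyed by check name."""
    checks = (
        ("duplicate_emails", DUPLICATE_EMAILS),
        ("invalid_ages", INVALID_AGES),
    )
    findings = {}
    for name, sql in checks:
        cursor.execute(sql)
        findings[name] = cursor.fetchall()
    return findings
```

Scheduled with cron, a script like this can flag problems after every import; empty lists for every check mean the data passed.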

Importing Data into MySQL

Choosing the right import method depends on your specific needs, data volumes, and real-time requirements. For small, one-time imports, the MySQL Workbench Import Wizard might be sufficient. For large-scale, recurring imports, LOAD DATA INFILE or bulk insert strategies combined with proper indexing and partitioning can significantly improve performance.

Remember, efficient data importing is just the beginning. The real value comes from how you use and analyze that data to drive business decisions. With Coefficient’s MySQL connector, you can seamlessly connect your imported data to familiar spreadsheet tools, enabling real-time analysis and collaborative decision-making.

Ready to take your MySQL data management to the next level? Learn more about Coefficient’s data connectivity solutions and start optimizing your data workflows today.